Workshop on Brain-inspired Hardware

The recent surge of interest in Deep Learning (DL) shows how much fruitful AI research the human brain has stimulated. However, AI researchers have drawn inspiration from the brain in a wide range of ways. In most AI applications, DL is used simply because it is an effective learning technique, without any strong analogy to how processes work in the brain. In this workshop, we aim to advance brain-inspired computation by exploring how approaches that draw a closer analogy with the functions of the brain can facilitate a move towards novel architectures and hardware. We believe that this direction of work will act as a driver for future breakthroughs in AI.

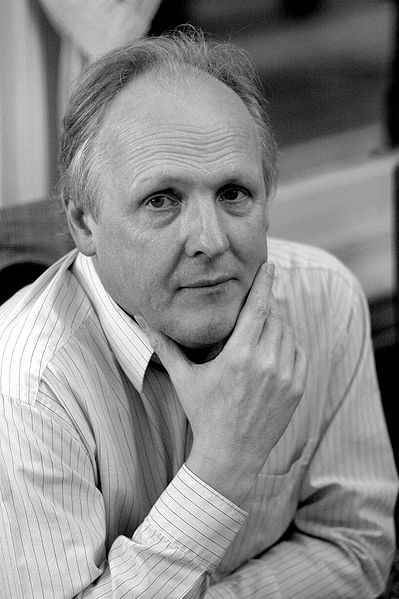

Professor Steve Furber of the University of Manchester gives a keynote speech on his project 'SpiNNaker'.

Date and Venue

- Date: March 30th, 2017

- Venue: AIST Tokyo Waterfront Annex Building, 11th floor

Keynote Speech

The SpiNNaker Project

Prof. Steve Furber, The University of Manchester

Abstract:

The SpiNNaker (Spiking Neural Network Architecture) project aims to produce a massively-parallel computer capable of modelling large-scale neural networks in biological real time. The machine has been 18 years in conception and ten years in construction. The project has so far delivered a 500,000-core machine in six 19-inch racks, which is now being expanded towards the million-core full system. Although primarily intended as a platform to support research into information processing in the brain, SpiNNaker has also proved useful for Deep Networks and similar applied Big Data applications. In this talk I will present an overview of the machine and the design principles that went into its development, and I will indicate the sort of applications for which it is proving useful.

Bio:

Steve Furber CBE FRS FREng is ICL Professor of Computer Engineering in the School of Computer Science at the University of Manchester, UK. After completing a BA in mathematics and a PhD in aerodynamics at the University of Cambridge, UK, he spent the 1980s at Acorn Computers, where he was a principal designer of the BBC Microcomputer and the ARM 32-bit RISC microprocessor. Over 85 billion variants of the ARM processor have since been manufactured, powering much of the world's mobile and embedded computing. He moved to the ICL Chair at Manchester in 1990, where he leads research into asynchronous and low-power systems and, more recently, neural systems engineering, where the SpiNNaker project is delivering a computer incorporating a million ARM processors optimised for brain modelling applications.

Time Table

| Time | Title | Speaker(s) |
|---|---|---|
| 10:00 - 10:05 | Opening Remarks | Junichi TSUJII, Director, AIRC at AIST, Japan & Professor, University of Manchester, UK |
| 10:05 - 10:40 | Neuromimetics in silicon neuronal networks | Takashi KOHNO, Associate Professor, The University of Tokyo, Japan |
| 10:40 - 11:15 | Time-domain analog computing and VLSI systems toward ultimately high-efficient brain-like hardware | Takashi MORIE, Professor, Kyushu Institute of Technology, Japan |
| 11:15 - 11:50 | Rise of Deep Neural Network Accelerators | Masato MOTOMURA, Professor, Hokkaido University, Japan |
| 11:50 - 12:25 | Why do we need "brain inspired computing"? | Yuichi NAKAMURA, Ph.D., NEC Corp., Japan; Takashi TAKENAKA, Ph.D., NEC Corp., Japan |
| | Lunch break | |
| 13:30 - 14:30 | Keynote Speech: The SpiNNaker Project | Steve FURBER, Professor, The University of Manchester, UK |
| 14:30 - 15:05 | A hardware simulator of visual nervous systems utilizing a silicon retina and SpiNNaker chips | Hirotsugu OKUNO, Assistant Professor, Osaka Institute of Technology, Japan |
| | Break | |
| 15:20 - 15:55 | Cat-scale artificial cerebellum on an energy-efficient supercomputer Shoubu | Tadashi YAMAZAKI, Assistant Professor, The University of Electro-Communications, Japan & Visiting Scholar, AIRC at AIST, Japan |
| 15:55 - 16:30 | Modeling an Embodied Learning Human Brain with Spiking Neurons and a Cortical Structure | Yasuo KUNIYOSHI, Professor, The University of Tokyo & Visiting Scholar, AIRC at AIST, Japan |
| | Break | |
| 16:45 - 17:30 | Discussion | |
| | Closing | |
| 18:00 - 19:00 | Further Discussion (invitation only) | |

Organising Committee

- Prof. Kazuyuki AIHARA, The University of Tokyo
- Prof. Shin ISHII, Kyoto University
- Prof. Tadashi YAMAZAKI, The University of Electro-Communications
- Dr. Junichi TSUJII, National Institute of Advanced Industrial Science and Technology
- Dr. Hidemoto NAKADA, National Institute of Advanced Industrial Science and Technology

Registration

Details

Neuromimetics in silicon neuronal networks

Takashi KOHNO, Associate Professor, The University of Tokyo, Japan


Abstract: The silicon neuronal network approach aims to realize an artificial nervous system by constructing a network of electronic circuit components that reproduce the electrophysiological activities of neuronal cells and synapses. It is an attempt to realize a next-generation information processing system that inherits the brain's advantageous characteristics, such as autonomy, adaptivity, robustness, low latency, and high power efficiency. Completely replicating a brain may seem a solid approach; however, electronic circuits that reproduce neuronal activities in detail generally require high power, because the basic characteristics of proteins and transistors are incompatible. We propose an approach to this problem that exploits the techniques of qualitative neuronal modeling, which reproduces the dynamical structures of neuronal activity with simple electronic circuits.

Bio:

Dr. Takashi Kohno received the B.E. degree in medicine and the Ph.D. degree in mathematical engineering from the University of Tokyo, Japan, in 1996 and 2002, respectively. He worked on medical care information systems at Hamamatsu University School of Medicine for two years. He then became a group leader in the Aihara Complexity Modeling Project, Japan Science and Technology Agency (JST), Japan. He has been an Associate Professor at the Institute of Industrial Science, University of Tokyo, since 2006. His research interests include the modeling of spiking neuronal networks based on nonlinear dynamics, and silicon neural networks, which are electronic-circuit versions of the nervous system.

Time-domain analog computing and VLSI systems toward ultimately high-efficient brain-like hardware Takashi MORIE, Professor, Kyushu Institute of Technology, Japan

Abstract:

Time-domain analog computing based on spiking neuron models and its VLSI implementation are proposed. Weighted-sum or multiply-and-accumulate (MAC) calculation, which is crucial in brain-like computing, can be performed with extremely low energy in a crossbar array architecture using the transient operation of RC (resistance and capacitance) circuits. A CMOS VLSI implementation based on this architecture is shown as a proof of concept. To achieve ultimately highly efficient brain-like VLSI systems, analog memory devices with very high resistance need to be developed, which might be realized using nanotechnology. Utilizing noise effectively in such devices is also essential, and some promising approaches are discussed.

Bio:

Takashi Morie received the B.S. and M.S. degrees in Physics from Osaka University, Osaka, Japan, and the Dr. Eng. degree from Hokkaido University, Sapporo, Japan, in 1979, 1981 and 1996, respectively. From 1981 to 1997, he was a member of the Research Staff at Nippon Telegraph and Telephone Corporation (NTT). From 1997 to 2002, he was an Associate Professor in the Department of Electrical Engineering, Hiroshima University, Higashi-Hiroshima, Japan. Since 2002 he has been a Professor at the Graduate School of Life Science and Systems Engineering, Kyushu Institute of Technology, Kitakyushu, Japan. His main interest is in the area of VLSI implementation of brain-like systems and related new functional devices.

Rise of Deep Neural Network Accelerators Masato MOTOMURA, Professor, Hokkaido University, Japan

Abstract:

Thanks to the enormous progress and success of deep neural networks (DNNs), computer architecture research has recently regained its past "excitement": many architectures, based on approaches that differ slightly or vastly, have been proposed to accelerate DNN training and inference. This talk will try to give insights into 1) why this is happening now, 2) what the recent findings of this movement are, and 3) where this architectural innovation is heading.

Bio:

Masato Motomura received the B.S. and M.S. degrees in physics and the Ph.D. degree in EE in 1985, 1987, and 1996, respectively, all from Kyoto University. He was with NEC from 1987 to 2011, where he was engaged in research and business development of dynamically reconfigurable hardware and on-chip multi-core processors. He is now a professor at Hokkaido University, where his research interests include reconfigurable and parallel architectures for deep neural networks and machine learning. He won the IEEE JSSC Annual Best Paper Award in 1992, the IPSJ Annual Best Paper Award in 1999, and the IEICE Achievement Award in 2011. He is a member of IEICE, IPSJ, and IEEE.

Why do we need "brain inspired computing"? Yuichi NAKAMURA, Ph.D, NEC Corp., Japan

Takashi TAKENAKA, Ph.D, NEC Corp., Japan

Abstract:

Social problems can arise in many areas, for example crime, lack of resources, and failing infrastructure. In such cases, integrating ICT (Information and Communication Technology) with AI algorithms is one good way to solve these problems. However, because of the limits of Moore's law, general-purpose processors can take a long time to run complicated AI algorithms such as recognition, deep learning, and reasoning. We see brain-inspired computing as a concept that can speed up the processing of AI algorithms. In this talk, I will present 1) the limits of conventional processing systems, 2) challenges for efficient AI processing, and 3) expectations for brain-inspired computing.

Bio: Yuichi NAKAMURA

Yuichi Nakamura received his B.E. degree in information engineering and M.E. degree in electrical engineering from the Tokyo Institute of Technology in 1986 and 1988, respectively. He received his Ph.D. from the Graduate School of Information, Production and Systems, Waseda University, in 2007. He joined NEC Corp. in 1988 and is currently general manager at System Platform Research Labs., NEC Corp. He is also a guest professor at the National Institute of Informatics and the chair of the IEEE CAS Japan Joint Chapter. He has more than 25 years of professional experience in electronic design automation, network on chip, signal processing, and embedded software development.

Bio: Takashi TAKENAKA

Takashi Takenaka received his M.E. and Ph.D. degrees from Osaka University in 1997 and 2000, respectively. He joined NEC Corporation in 2000 and is currently a senior principal researcher at NEC Corporation. He was a visiting scholar at the University of California, Irvine from 2009 to 2010. His current research interests include system accelerators, system-level design methodology, and high-level synthesis. He has also served on the organizing and program committees of several premier conferences, including ASP-DAC and DAC. He is also a member of IEEE, IEICE and IPSJ.

A hardware simulator of visual nervous systems utilizing a silicon retina and SpiNNaker chips Hirotsugu OKUNO, Assistant Professor, Osaka Institute of Technology, Japan

Abstract:

To understand the functional roles of visual neurons in the retina and the visual cortex, the responses of a visual neuronal network in natural visual environments should be investigated. In this talk, I will introduce a hardware simulator that reproduces neural activities in the retina and the visual cortex with two features: real-time reproduction of neural activities with physiologically feasible spatio-temporal properties, and a configurable model structure. To achieve both real-time simulation and configurability, we employed a mixed analog-digital architecture with multiple parallel processing techniques. The system was composed of a silicon retina with analog resistive networks, a field-programmable gate array, and SpiNNaker chips. The emulation system successfully simulated part of the retinal and cortical circuits at 200 Hz.

Bio:

Hirotsugu Okuno received the B.S. and M.S. degrees in electronic engineering from Osaka University. After working for Kansai Electric Power, he entered the Graduate School of Engineering, Osaka University, where he received the Ph.D. degree in electrical, electronic and information engineering in 2008. From 2008 to 2016, he was an Assistant Professor at the Graduate School of Engineering, Osaka University. Currently, he is an Assistant Professor at the Faculty of Information Science and Technology, Osaka Institute of Technology. His research interests include visual signal processing in biological systems and its applications in robotics.

Cat-scale artificial cerebellum on an energy-efficient supercomputer Shoubu Tadashi YAMAZAKI, Assistant Professor, The University of Electro-Communications, Japan & Visiting Scholar, AIRC at AIST, Japan

Abstract:

We have built a realistic cerebellar model based on a large body of anatomical and physiological data. The model consists of more than 1 billion leaky integrate-and-fire neurons, which is comparable to the whole cerebellum of a cat, and performs a form of supervised learning of spatiotemporal signals known as reservoir computing. It is implemented on Shoubu, an energy-efficient supercomputer with 1,280 PEZY-SC processors. We employed several PEZY-SC-specific techniques for efficient simulation, and invented a new technique to reduce the amount of inter-node communication for spike information exchange. As a result, we achieved real-time simulation: simulating 1 s of model activity at a temporal resolution of 1 ms completes within 1 s of real-world time. This real-time simulation capability would be indispensable for various engineering applications that require real-time signal processing and motor control, such as adaptive robotics.

Bio:

Tadashi Yamazaki received the B.E. degree in computer science from The University of Electro-Communications, Tokyo, Japan, in 1996, and the M.E. and Ph.D. degrees in computer science from the Tokyo Institute of Technology, Tokyo, Japan, in 1998 and 2002, respectively. From 2002 to 2012, he was a Research Scientist with the RIKEN Brain Science Institute. Since 2012, he has been an Assistant Professor with the Graduate School of Informatics and Engineering, The University of Electro-Communications. His research interests include theoretical/computational modeling of the cerebellum, brain-style artificial intelligence, high-performance neurocomputing, and adaptive robotics.

Modeling an Embodied Learning Human Brain with Spiking Neurons and a Cortical Structure Yasuo KUNIYOSHI, Professor, The University of Tokyo & Visiting Scholar, AIRC at AIST, Japan

Abstract:

We constructed a computer model of an early human brain, embedded in a precise physical model of a musculo-skeletal body. The brain model consists of LIF (Leaky Integrate and Fire) type spiking neurons with STDP (Spike Timing Dependent Plasticity) learning rules. The neurons are distributed on a 3D neocortex model whose local areas are interconnected according to tractography data obtained by DTI (Diffusion Tensor Imaging) of human neonates. In embodied learning simulations, the brain model acquired body map representations and visual-somatosensory integration under normal embodiment (in-utero) conditions but not under atypical (ex-utero) conditions. These results indicate the importance of information structuring via embodiment for early development.
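The abstract names two standard building blocks, LIF neurons and STDP. The Python sketch below illustrates them only at the level of a single neuron and a single synapse; it is not the speaker's brain model. All parameter values (membrane time constant, rest/reset/threshold potentials, STDP amplitudes and time constants), the forward-Euler integration step, and the all-to-all pairing of spikes are illustrative assumptions, and the function names `simulate`, `stdp_dw` and `apply_stdp` are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron; all default values are illustrative assumptions."""
    tau_m: float = 20.0      # membrane time constant (ms)
    v_rest: float = -65.0    # resting potential (mV)
    v_reset: float = -65.0   # potential after a spike (mV)
    v_thresh: float = -50.0  # firing threshold (mV)
    v: float = -65.0         # current membrane potential (mV)

    def step(self, drive: float, dt: float) -> bool:
        """One forward-Euler step of tau_m * dV/dt = -(V - v_rest) + drive.

        `drive` is the input expressed in mV (resistance times current).
        Returns True if the neuron fired during this step.
        """
        self.v += (dt / self.tau_m) * (-(self.v - self.v_rest) + drive)
        if self.v >= self.v_thresh:
            self.v = self.v_reset  # reset after the spike
            return True
        return False


def simulate(drive: float, duration_ms: float, dt: float = 1.0) -> list[float]:
    """Drive one neuron with a constant input and return its spike times (ms)."""
    neuron = LIFNeuron()
    spikes = []
    for k in range(int(duration_ms / dt)):
        if neuron.step(drive, dt):
            spikes.append((k + 1) * dt)  # time at the end of the step
    return spikes


def stdp_dw(delta_t: float, a_plus: float = 0.01, a_minus: float = 0.012,
            tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for delta_t = t_post - t_pre (ms)."""
    if delta_t > 0:   # pre fires before post: potentiation
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:   # post fires before pre: depression
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


def apply_stdp(w: float, pre_spikes: list[float], post_spikes: list[float],
               w_max: float = 1.0) -> float:
    """Sum STDP updates over all pre/post spike pairs, then clip w to [0, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_dw(t_post - t_pre)
    return min(max(w, 0.0), w_max)


if __name__ == "__main__":
    post = simulate(drive=20.0, duration_ms=100.0)
    print("post spikes (ms):", post)
    # Pre spikes arriving 5 ms before each post spike strengthen the synapse here.
    print("new weight:", apply_stdp(0.5, [t - 5.0 for t in post], post))
```

With these assumed parameters, a constant drive of 20 mV makes the neuron fire periodically, while any drive below the 15 mV gap between rest and threshold never reaches threshold. The model described in the talk couples very many such neurons on a 3D cortical sheet and a musculo-skeletal body, which this sketch does not attempt.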

Bio:

Yasuo Kuniyoshi received his Ph.D. from The University of Tokyo in 1991 and joined the Electrotechnical Laboratory, AIST, MITI, Japan. From 1996 to 1997 he was a Visiting Scholar at the MIT AI Lab. In 2001 he was appointed Associate Professor at The University of Tokyo, and he became a full Professor there in 2005. He is also the Director of the RIKEN BSI-Toyota Collaboration Center, has been the Leader of the MEXT Grant-in-Aid for Scientific Research on Innovative Areas "Constructive Developmental Science" since 2012, and has been the Director of the Next Generation AI Research Center of The University of Tokyo since 2016.